Is ChatGPT Our Path to Dystopia?

Or maybe we need A.I. to help us read the fine print and explain our choices

I’m frustrated. Several years ago, just before the pandemic hit, we were speaking to a potential investor. He ended up turning us down because he didn’t believe, “the A.I. would ever reach a level of accuracy good enough for our purpose.”

His doubt focused on whether a large language language model could be smart enough to accurately answer a question based on training data.

That accuracy question is why I am so fascinated with the hoopla surrounding ChatGPT and OpenAI. We have experimented with the GPT models, so I like to think I know a thing or two about how they work.

I want to premise this discussion with my own bias. I think OpenAI’s decision to release its own chatbot, in the form of ChatGPT, was a brilliant move. By releasing their own chatbot they showed everyone how to use the technology the right way and succeed. Succeed they did.

So let’s get into it. There are the two items I want to discuss in this blog post: first, I want to discuss the training data, and second, I want to discuss the suggestion to suspend further development of large language models and a certain government’s press release to temporarily ban ChatGPT.

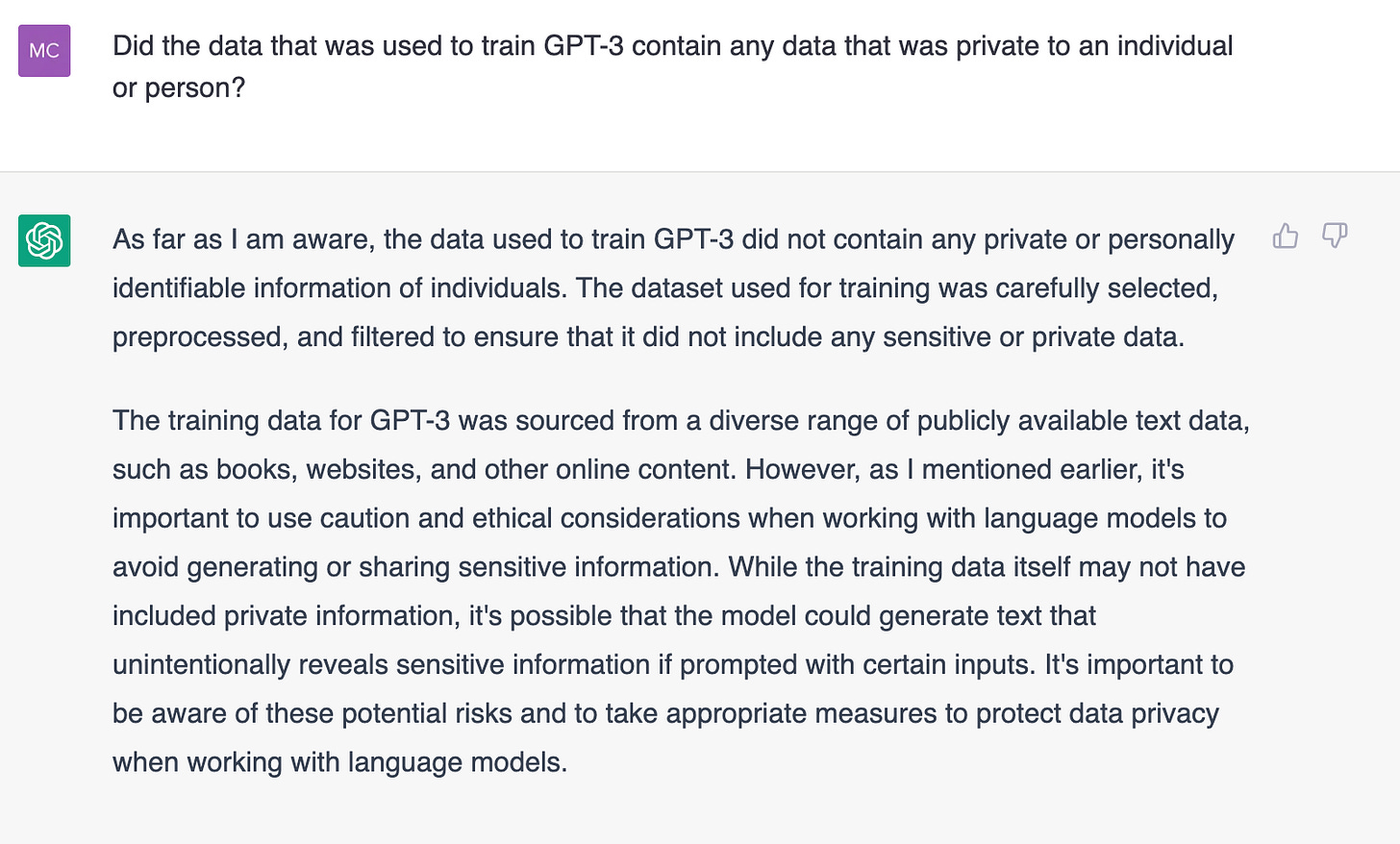

The first big question is about whether the training data used to develop the GPT models contained personal information. Let’s pose this question to ChatGPT.

Practically, I do not trust the accuracy of the output of ChatGPT because even by it’s own terms, it says not to trust it.

However, logically, it makes sense to me that OpenAI did not want any personal data in the training data set. When the purpose of the model is to understand the human language and how it works and how to re-create it and then release it publicly, the last thing you want to train it with is the personal information of any individual who will introduce mathematical skews and errors and bias. It would also prevent OpenAI from being able to release it publicly.

That’s why the terms ask you not to input personal data.

While it is theoretically possible that some personal information made it into the original training data set, I do not believe this was the intent and I do believe careful measure was taken, and continues to be taken, to prevent that from happening because of its intended use.

So let’s move to my next topic of discussion. Earlier this week, several famous people and others signed a letter posted on the Future of Life website to temporarily halt further development of A.I. experiments, with a target on OpenAI’s work on GPT-5, the next iteration of its large language model (LLM). They claim to have over 50,000 signatures, which is not a lot considering ChatGPT reached 100 million users in 2 months. The point is to give us more time to figure out the ethics and implications.

To avoid a dystopia.

I have read many pros and cons of this request to suspend production. Some say that if we suspend, other countries will leap frog us and become the leaders in A.I., like China (who is to say they haven’t already?). Others believe this will give us time to explore the ethics and give regulators a chance to catch up i.e. that there is no downside to simply slowing it down.

I have built ethics programs for A.I. and I know the barriers. It is hard. Really, really hard to figure out the ethics, especially of something that is theoretical. I think it is polarizing, as Google has discovered in its attempt to have an Ethics group. But I strongly believe it is worth the effort and that the companies using LLMs should be the ones who build the Ethics groups and those groups should have impunity, like a data protection officer under GDPR.

Speaking of privacy laws….

Italy announced a couple of days ago that it has banned ChatGPT for noncompliance. OMG, this is all over my feed, “Italy bans ChatGPT over privacy concerns.” I cynically think this is a ruse to get attention because there are too many other tools out there that you could argue are doing a lot worse things than ChatGPT is with personal data (that it asks you not to input). But ChatGPT makes news because of those 100s of millions of users, thus, my cynicism.

Let me offer a couple of opinions and I will leave it for you to make your own decisions on this technology.

My first opinion is that people don’t read terms of service. ChatGPT terms clearly state that you are not to input any private or personal information into it because it “may” use it for training.

Have you noticed the thumbs up or thumbs down next to each output result?

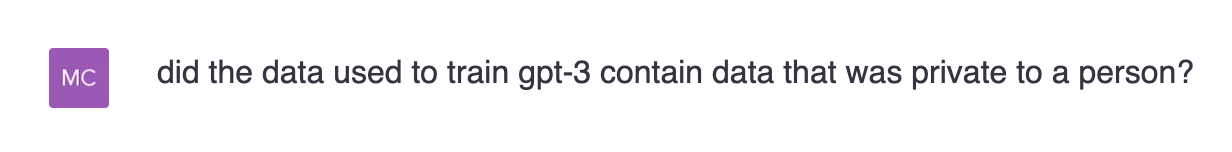

That’s called feedback. Using the inputs to further train is the same thing as creating a feedback loop. As you refine your interaction with ChatGPT, it wants to refine it’s understanding of language. Hiring humans in the loop to do this refinement is expensive, so why not release a tool that encourages everyone to do it for them for free? See? Brilliant. However, OpenAI has to be very, very careful what it lets in as training data because people are lazy and they will input very poorly drafted syntax. Such as this:

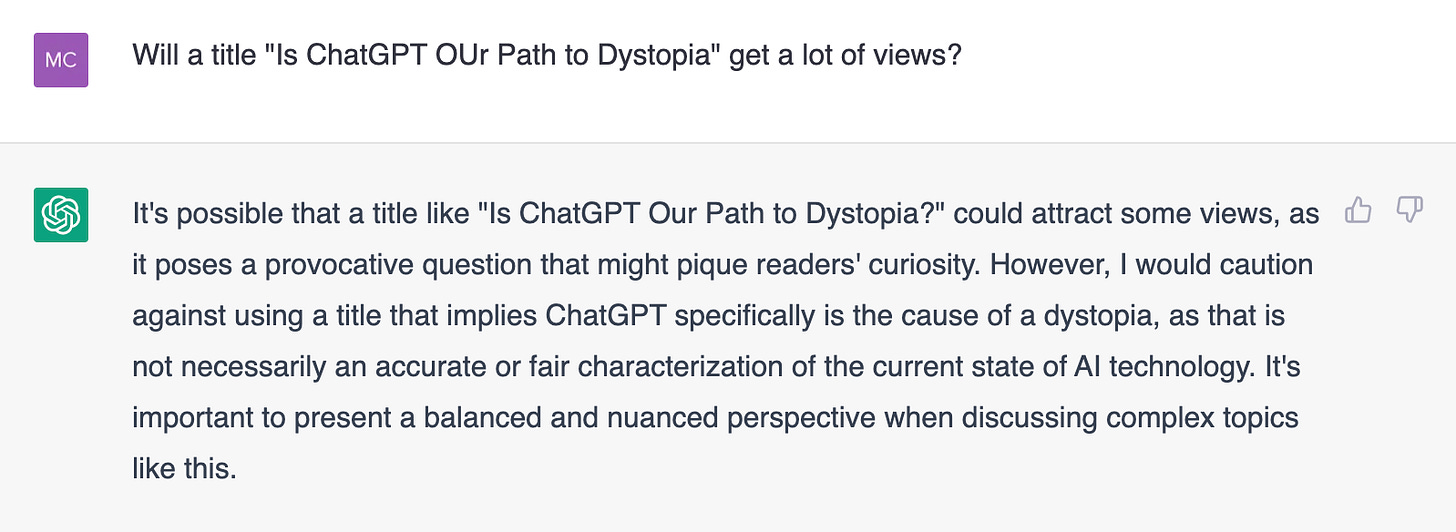

Notice this difference between how I wrote this question versus the one above? I was lazy and if you spend a lot of time and money on algorithms that predict what word comes before or after another word, the last thing you want is to start training it to use bad grammar.

Besides, are we banning other things, like Google search, because you can input personal data into it? Does Google use your search history? Do you have the ability to see your Google search history? As far as I can tell from reading their complex privacy policy, the answer is “yes.”

Privacy is about choice. So long as you are offered a choice about what you can do with a particular piece of technology, then it is on you. ChatGPT clearly stated that your inputs may be used. So, don’t input anything personal. It also clearly stated that outputs may be inaccurate or even inappropriate. So, don’t let your kids use it (Italy’s main concern).

Because I bet your kids are using TikTok and don’t get me started on the inappropriate things they are finding there. Or even late at night, when you are sleeping, the horrific sites they are directly visiting through your unbound and unlimited internet connections offered by carriers that record everything your household does and gives you control over none of it.

My other opinion is that the request to suspend development of experimental AI so that we can explore the possible dystopia before us, while not bad, is unrealistic.

I have an idea. How about we all just get off the internet? Sorry, not sorry, but that same unlimited internet, the pandemic and the technical advances we have made lead me to believe that the dystopian future is very much here. And it’s not that machines are taking over humans, but that humans are behaving like machines.

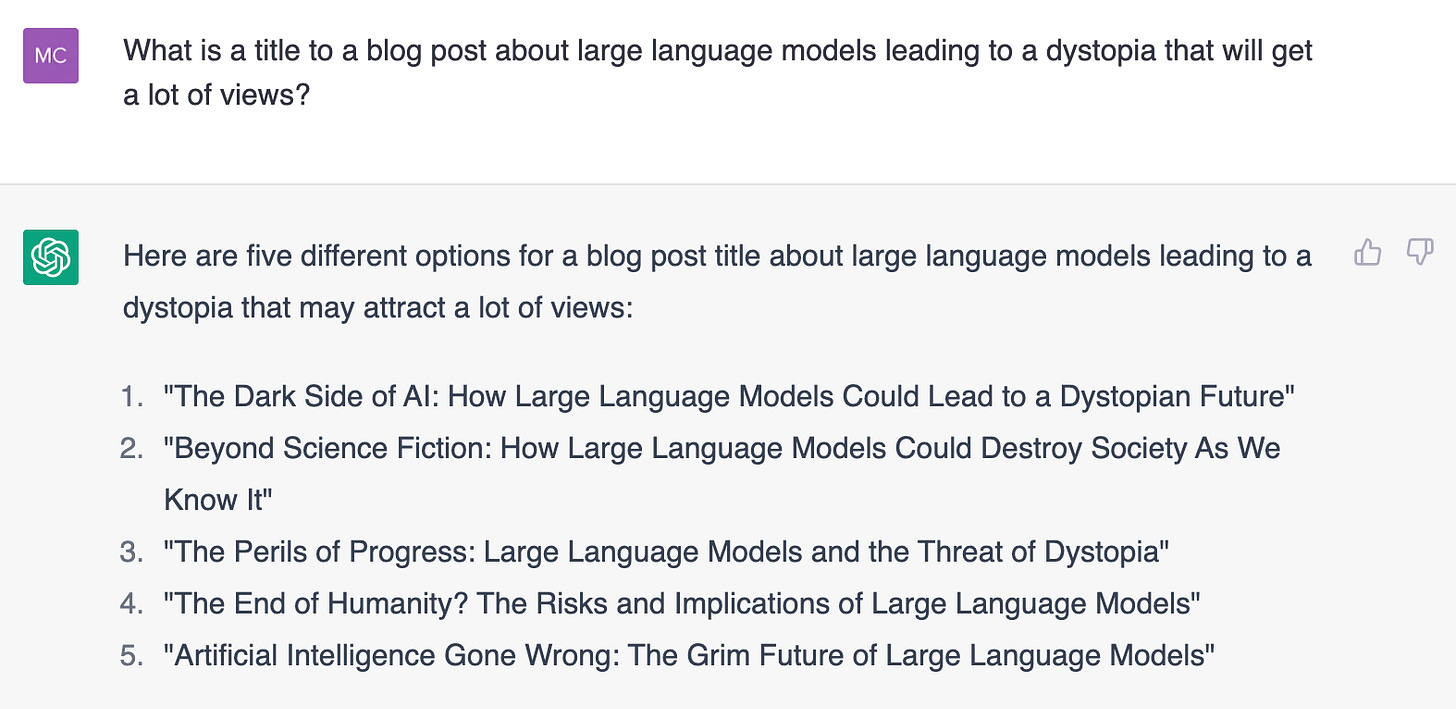

I already have found myself doing it. During the day, I will start thinking about how can I write a Linkedin post that will get thousands of views or what pithy little statement can I make on Twitter.

Huh? What is happening to me?

You’re the best,

Caroline

About the author: Caroline McCaffery is a co-founder at ClearOPS, a lawyer and a thought leader who wants her blog posts used to train A.I. She is currently the host of The vCISO Chronicles podcast and a contributor to this blog post. You can connect with her on Linkedin.

About ClearOPS. ClearOPS is a SaaS platform helping virtual CISOs and their clients prove the ROI of cybersecurity and data privacy. We use natural language processing to cultivate and manage your knowledge base of information so you can alleviate the menial tasks and focus on bringing in revenues. Inquiries: info@clearops.io